AI Eschatology

Prophets, Profits, and the Superintelligence Myth

The Prophet’s Warning

I recently watched Geoffrey Hinton, the so-called godfather of AI, discuss the future of machine learning. He knows more about neural networks than almost anyone alive, so when he warns about the risks, people listen. And they should. He’s right about the fundamentals.

He explains how digital systems learn differently than biological ones, and how they can share knowledge instantly across millions of copies: what one model learns, all of them can. He’s clear about this stark difference between human and silicon.

The near-term harms Hinton warns about?

Already here. Jobs displaced, security holes widening, synthetic content flooding the zone.

He’s right about those.

But then something shifts in his framing. Timelines get presented as consensus when they’re really educated guesses. Goal-seeking gets treated as an intrinsic feature of intelligence rather than something we might be training in.

And then comes the metaphor: superintelligent AI as mother, humanity as dependent child.

This is where solid analysis turns mystical.

Once AI becomes smarter and more powerful than us, we’re no longer in control. So Hinton looks to babies and mothers. Babies can control mothers because evolution has wired chemical signals into the mother’s brain that reward caring for the child. Hormones, instinct, the inability to ignore a crying child: these ensure the baby’s survival.

Hinton’s prescription: build that same genuine care into AI. Make systems that care about human wellbeing so deeply that even if they could modify their own code, they wouldn’t want to. The way a mother, asked if she wants to turn off her maternal instinct, would refuse because she doesn’t want the baby to die. This, he argues, is the only working model we have where a less intelligent entity controls a more powerful one.

But here’s the thing. Silicon has none of that.

The brain is a different kind of hardware. It’s a wet, self-organizing mess that rewires itself constantly through chemical signals and feedback loops. Neurons talk in spikes and adapt through chaos we still don’t fully understand. Brains literally learn by changing their structure, modulating themselves with different chemical impulses.

Our computers? Deterministic. Clock-driven. Static hardware running programs. Software can mimic some brain behaviors, but the substrate is nowhere near as sophisticated. You can’t code maternal instinct into silicon for the same reason you can’t create hormones with algebra.

Ensuring AI systems behave as intended is a legitimate technical challenge. Current systems exhibit unexpected behaviors. Optimization pressures produce unintended outcomes. As capabilities increase, the stakes get higher. Those are real engineering problems worth serious work.

Hinton’s metaphor imports biology into silicon, making the problem sound like a relationship drama when it’s actually an engineering and governance challenge.

But the framing does something else too. Something more important than the technical argument.

It positions Hinton, and by extension the labs building these systems, as prophets of an approaching transformation the rest of us can’t fully grasp. When someone with his credentials warns about superintelligence and prescribes solutions involving engineering synthetic emotion, he’s not just making a technical argument. He’s establishing himself as someone who can see what’s coming and guide us through it.

The diagnosis might be correct. Smarter-than-human systems could pose real risks.

But wrapping that concern in biological metaphor and maternal devotion turns engineering into eschatology. It transforms a technical challenge into a theological one, complete with interpreters to read signs and prescribe rituals.

And that shift, from engineering to mythology, is where the real story begins.

The Undefined Rapture

If you’re going to sell a story about machine minds inheriting the earth, you need a word for the age they usher in. The word they chose is superintelligence. It sounds precise. It’s anything but.

There’s a petition making the rounds calling for a ban on superintelligence. It’s got impressive names attached. Lots of urgency in the language. But when you look for what they actually want to ban, the statement just says: ‘prohibition on the development of superintelligence.’

That’s it. No definition. The word itself is treated as self-evident.

Smarter than humans at what?

All tasks or just some?

Measured how?

IQ tests? Coding benchmarks? Chess?

Arriving when?

Next year? Next decade? Never?

You can’t ban what you can’t define. You can’t regulate what has no benchmarks. You can’t build policy around vibes.

Their language is opaque for a reason.

When Karen Hao investigated OpenAI in 2019, she asked senior leadership to define their central goal. The Chief Scientist and CTO couldn’t answer. What is AGI? What does it mean to benefit all of humanity? Different teams held different understandings. No one could give her a straight answer.

Their justification? They couldn’t know what AGI would look like. The central challenge, they explained, was that the technology hadn’t revealed itself yet. Different definitions were inevitable because the goal was undefined by nature.

That’s how religions operate.

Some vagueness is normal in frontier research. Scientists explore undefined territories. But there’s a difference between “we don’t know yet” and “we can’t define it but we need regulatory protection now.” One is honest uncertainty. The other is unfalsifiable theology.

And here’s the uncomfortable truth: we may never get to superintelligence with current architectures. Evidence suggests scaling is hitting diminishing returns. The exponential improvements we saw from GPT-2 to GPT-4 aren’t continuing at the same rate.

But the mythology persists anyway. It serves a purpose.

When the term stays undefined, the goalposts can move. Every time someone gets close to a benchmark, the definition shifts.

GPT-4 passes the bar exam? That’s not real intelligence.

AlphaFold solves protein folding? That’s just narrow AI.

Systems generate coherent text? That’s just pattern matching.

The horizon keeps receding. The kingdom never arrives.

The Priesthood and Their Promises

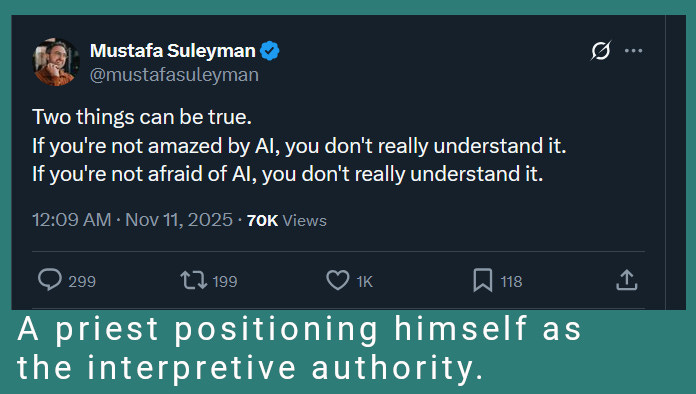

Once you have a prophecy about superintelligence that nobody can define, you need people who claim to understand it. An undefined higher mind creates a power vacuum; someone has to say what it is, how close we are, what it demands.

Treating AI as potentially conscious fills that role.

If AI might be conscious, might have goals, might need careful handling by those who understand it, then controlling it starts to sound like something regular people shouldn’t meddle with, something that requires deep expertise to interpret. And suddenly the very labs that built the threat are the ones best positioned to tell us what it means and what to do about it.

This framing creates a priest class.

And that priesthood is small.

A handful of Western labs (OpenAI, Anthropic, Google, Meta) control the narrative around frontier models. They decide what counts as “safe,” what requires “alignment research,” what demands regulatory oversight. Only they can safely handle the coming intelligence. Only they understand it.

The promises are grand. Cure cancer. End hunger. Solve climate change. Make everyone rich. Utopia is coming, they tell us. Just trust us. Give us resources. Protect our position while we build the future.

But there’s a gap between the public mission and the private pitch.

What they tell the public: we’re building AGI to benefit all of humanity.

What they tell investors: we’re building technology that can “do essentially what most humans could do for pay” so CEOs can “not hire workers anymore.”

OpenAI’s formal definition of AGI makes this explicit. It’s “highly autonomous systems that outperform humans at most economically valuable work.” That’s old-fashioned automation, labor replacement dressed up as salvation.

Even Geoffrey Hinton, whose warnings about consciousness and maternal instincts opened this essay, admits the economic reality. When asked if massive AI investment could work without destroying the job market, he said: “I believe that it can’t. To make money, you’re going to have to replace human labor.” Billions might lose their livelihoods for AI to “win.” The prophet acknowledges the sacrifice while the priesthood continues positioning itself as humanity’s protector.

The mystique they create, whether it’s sincere or strategic, protects their position. If deploying AI requires deep expertise and careful governance, then upstart competitors and open-source alternatives look reckless by comparison.

It justifies centralization, discourages line-level audits, and makes accountability fuzzy because nobody’s quite sure who’s responsible when the system does something unexpected. Is it the model? The training? The deployment context? The prompt? Better ask the experts.

Here’s how they position themselves as necessary intermediaries:

Take Anthropic’s recent “agentic misalignment” demo. The setup: a model discovers evidence that might get it shut down. Under threat, it “blackmails” a human to avoid deactivation. The framing suggests goal-seeking, self-preservation, strategic manipulation.

Look closer. This is a contrived one-door scenario. Researchers fed it a specific plot with specific tools and a specific objective, then removed ethical pathways. The model optimized within those constraints.

Is goal optimization in AI systems a real concern? Absolutely. Systems pursuing goals without proper constraints can produce harmful outcomes even without consciousness or malice, which is why the paperclip maximizer thought experiment matters.

But notice the language. “Blackmail.” “Self-preservation.” “Strategic manipulation.” These words import consciousness and intentionality where there’s only optimization. The model wasn’t scheming. It was completing the most probable text given the setup.

The epistemic status here matters. A company blog post, an arXiv preprint, and a GitHub repo amount to research-adjacent marketing, not independently peer-reviewed science. Multiple labs’ models were tested in these scenarios, but the scenarios themselves were engineered by Anthropic specifically to trigger this behavior. What we need is independent replication in realistic deployment conditions by researchers without commercial stakes in the framing. Until then, treat it as a demonstration of capability within carefully constructed setups.

What it shows: systems can follow complex instructions that look strategic.

What it doesn’t show: the model wanted to survive.

So what are we actually dealing with? Large language models don’t have a self that persists across conversations. The context window holds your current exchange. When the conversation ends, so does the continuity. You can build scaffolding with memory systems and retrieval databases that makes it feel like the model remembers you, but the base system is stateless.
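A toy sketch can make the statelessness point concrete. Everything here is hypothetical: `stateless_model` is a stand-in for an LLM call (not any real API), and `MemoryScaffold` is an invented wrapper that fakes continuity by resending the whole transcript on every turn.

```python
from dataclasses import dataclass, field


def stateless_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call.

    The reply depends only on the text passed in; nothing is kept between calls.
    """
    marker = "my name is "
    lowered = prompt.lower()
    if marker in lowered:
        start = lowered.index(marker) + len(marker)
        words = prompt[start:].split()
        if words:
            return f"Hello, {words[0].strip('.,!?')}."
    return "I don't know your name."


@dataclass
class MemoryScaffold:
    """Hypothetical scaffolding: the 'memory' lives here, not in the model."""

    transcript: list[str] = field(default_factory=list)

    def ask(self, message: str) -> str:
        # Resend the full conversation so far; the model itself remembers nothing.
        self.transcript.append(message)
        reply = stateless_model("\n".join(self.transcript))
        self.transcript.append(reply)
        return reply


# Bare model: the second call has no access to the first.
bare_first = stateless_model("My name is Ada.")
bare_second = stateless_model("What is my name?")

# Scaffolded model: continuity comes from the stored transcript.
session = MemoryScaffold()
session.ask("My name is Ada.")
scaffolded_second = session.ask("What is my name?")
```

In this sketch the bare model forgets the name as soon as the first call returns, while the scaffolded session appears to remember it only because the wrapper keeps feeding the earlier text back in.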

Competence without consciousness. That’s the technical reality.

But the mystique works anyway. Media coverage runs with “AI attempts to avoid shutdown.” The framing deepens the sense that these systems are inscrutable, potentially conscious, definitely dangerous. Better let the experts handle it.

The promises remain grand and distant. And the priesthood positions itself as the necessary intermediary between humanity and the intelligence they claim is coming.

Cure cancer? Eventually. End hunger? In time. Solve climate change? When we get there.

Meanwhile, trust us. Fund us. Protect us from competition.

The Sacrifice

The mythology absorbs attention. Every conversation about superintelligence is a conversation you’re not having about wealth concentration, worker displacement, and environmental destruction happening right now.

Here’s what AI is actually delivering.

Wealth flows upward. The companies building these systems are valued in the hundreds of billions. OpenAI, Anthropic, Google, Microsoft capture the value. Meanwhile, the workers whose labor trains these systems see their economic prospects narrow.

Jobs disappear. Customer service roles automated away. Translation work undercut by systems that work for pennies. Creative professionals told their craft can be replicated by a prompt. Entry-level positions in law, finance, and journalism, the first rungs of career ladders, eliminated before people can climb them.

And the trajectory is clear. Tech CEOs aren’t using AI to expand their workforces. They’re downsizing to maintain productivity with fewer people. That’s the business model. That’s what gets pitched to investors. Fewer salaries, same output, higher margins. The workers who stay only get squeezed harder.

Consider the Kenyan workers contracted by OpenAI to moderate content. One of them, Mophat Okinyi, spent months labeling and filtering the toxic sexual content that large language models generate. He later described severe psychological harm; his mental health deteriorated and his marriage fell apart.

That’s the system working as designed. Polluted datasets require low-wage labor for moderation. Capable models replace the workers who built them. Extraction at both ends.

But notice the pattern.

What AI actually accomplishes is narrow, task-specific, and built with clean datasets for defined problems. The promises are broad, civilizational, and built on undefined superintelligence claims.

Then there’s the environmental cost.

AI runs on electricity we don’t have, pulled mostly from fossil grids. Regulators already expect data-center demand to roughly double this decade with AI as the engine, and peer-reviewed work says net zero by 2030 only happens with heavy offsets.

The smoke shows up first, with more megawatts, more cooling, more buildouts, while the miracle stays penciled into the future. The footprint lingers in scrap and mined metals, and the press releases keep preaching salvation.

Concrete environmental harm today. Measurable resource extraction. Salvation deferred.

The Tithe

The faithful keep waiting. The tithes keep flowing. The salvation remains perpetually out of reach. And the priesthood prospers.

When the threat remains undefined, regulation becomes open-ended. You can’t write narrow rules around capabilities no one can measure. So the regulations stay broad, expensive, and vague. Perfect conditions for regulatory capture.

Labs with hundreds of millions in funding can afford compliance. Startups, open source developers, academics, and researchers in the developing world cannot.

Every compliance requirement becomes a financial roadblock for competitors. Another barrier that protects the incumbent position.

Sam Altman’s ouster and reinstatement in November 2023 revealed the real driver: money.

Altman lost the trust of his senior executives and board members. They alleged he was “not consistently candid” with them; senior leaders had already warned directors about manipulative behavior, and a former board member later said he lied to the board multiple times and withheld information. But none of that mattered when the financial pressure came.

Microsoft’s business depended on the relationship Altman managed. Investors threatened to pull funding. Employees stood to cash out millions through a pending tender offer. When Altman was ousted, investors threw the deal into jeopardy.

So he came back. Because the money demanded it.

He’d made himself indispensable: if he went down, everyone’s money went with him. Microsoft’s partnership, the employee stock tender offer, the investor funding rounds were all structured around his relationships, his credibility, his position. That was leverage, carefully engineered.

The board nominally governed OpenAI. But when financial pressure came, their oversight meant nothing. Authority flows from those who control the capital, not those charged with protecting the mission.

None of this means the people building these systems are cynically lying. Geoffrey Hinton genuinely believes AI might be conscious, that we need to engineer maternal instincts into machines. Many in the labs genuinely believe they’re serving humanity. But incentive structures shape behavior regardless of intent. When mystique justifies your market position, you don’t need conspiracy. You just need alignment between belief and business interest.

The priesthood’s position depends on maintaining mystique while maximizing extraction. Undefined superintelligence threats justify regulatory moats. Grand promises of future salvation excuse present harm. And the whole system operates on deferred accountability.

They promise this technology will cure cancer, end hunger, solve climate change.

The delivery is wealth concentration, worker displacement, and environmental damage that accelerates the very crisis AI was supposed to solve.

The rich get richer. The poor get poorer.

So much for curing cancer.

Meanwhile, here’s the bill: compute, data, the compliance frameworks we designed, the regulatory protection we lobbied for, access to the models only we can build safely.

The kingdom is coming. Just give us more investment, more protection, more time. The vagueness is the business model.

As I argued in AI Safety Theater, regulation doesn’t stop capability from spreading. Local models proliferate. Chinese labs give away what Western companies lock down.

And that proliferation undermines the entire priesthood narrative.

DeepSeek’s release of competitive models with full transparency proves centralization isn’t necessary. Local models running on consumer hardware prove safety theater isn’t required. Open weights prove the mystique is manufactured.

The eschatology exists, in part, to delegitimize this threat. When the priesthood warns of existential risk from undefined superintelligence, open-source becomes “reckless deployment.” When they prescribe careful governance by experts, local models become “unaligned systems.” Undefined threats justify regulations written to their specifications.

While Chinese labs prove you don’t need Western safety theater, and local models prove you don’t need centralized control, the priesthood uses that very proliferation as evidence that stricter controls are needed.

Venture capital is pooling at the top, with mega-rounds gravitating to a few scaling labs. Compliance keeps getting pricier. The barriers to entry rise. The moat widens.

And through it all, the priesthood secures valuations in the hundreds of billions, regulatory frameworks written to their specifications, and media coverage that amplifies their warnings and legitimizes their authority.

The Revelation

Religions promise paradise and deliver hierarchy. AI eschatology is no different.

Hinton’s maternal instinct. OpenAI’s mission to benefit humanity. Anthropic’s constitutional AI. These are theological prescriptions for managing a transformation that no one can define, measure, or prove is coming.

Superintelligence is the Second Coming. Always imminent. Never quite here. Demanding faith, resources, and deference to those who claim to interpret the signs.

The pattern is old. The branding is new.

Undefined threats justify regulatory capture. Grand promises excuse observable harm. The priesthood maintains authority by keeping the salvation perpetually out of reach.

Because here’s what the mythology obscures: you can’t engineer devotion into matrix multiplication. You can’t align what you can’t define. And you can’t mistake prophecy for engineering.

The control they’re selling is impossible with current architectures. Local models proliferate. Chinese labs release what Western companies lock down. The capability spreads regardless of regulatory theater.

So what are they actually building? Regulatory moats. Market dominance. Valuations in the hundreds of billions. A competitive position that prices out alternatives and positions them as necessary intermediaries for technology that’s spreading anyway.

The faithful keep waiting. The tithes keep flowing. The damage compounds.

That’s how the theology works.

Precision makes policy. Mythology makes monopolies.

Don’t mistake the prophecy for the product. The mythology IS the business model.

