AI Feudalism

How Tech Giants Sell Intimacy and Fake Openness

The Digital Caste System in AI

In August 2025, two events reshaped the AI landscape. At first glance, they seemed unrelated, but they were really two sides of the same coin.

On August 7th, OpenAI unveiled GPT‑5 with great fanfare. It was marketed as a major leap forward. But when it arrived, something else quietly vanished: GPT‑4o, the warmer and more personable model many users had come to rely on.

With little warning, it was gone.

Users noticed. The backlash was immediate.

Twenty-four hours later, GPT‑4o returned. Now locked behind a $20/month paywall. What had been free was now premium. Emotional continuity had become a subscription feature.

Just a few days earlier, OpenAI announced a new line of “open-weight” models (LLMs you can download and run on your own hardware) called GPT‑OSS. The tech press called it a win for AI freedom.

But “open-weight” doesn’t always mean “open-source.” You can run the model, if you have the right hardware. But you can’t examine how it was trained, what data shaped it, or how to make one yourself.

No training code. No datasets. No transparency.

And to run it well, you’ll need a high-end GPU (one of the expensive computer chips that make AI possible). The kind most people don’t own.

So we now have class-tiered intimacy. The emotional quality of your AI companion depends on how much you can pay.

And we have curated openness, where so-called “freedom” comes with hidden strings, and the tools only work if you’ve got serious computing power.

Together, these events reveal a deeper pattern. “Open” and “accessible” are just marketing terms. In practice, AI has become a hierarchy, a digital caste system. Tools at the bottom are rented and locked down. Tools at the top are shaped and owned. In between lies a narrow corridor of access, just wide enough to give the illusion of freedom.

Class-Tiered Intimacy

As I explored in “AI Companions Aren’t Causing Loneliness. They’re Exposing It,” the widely circulated OpenAI–MIT study on user relationships with ChatGPT was less a work of science than corporate storytelling in a lab coat.

I dismantled its methodology months ago: the shallow survey design, the mismatched voice/text sampling, the absence of any control group. Still, the numbers revealed something important.

Published in April 2025 under the title Investigating Affective Use and Emotional Well-being on ChatGPT, the study found that:

42% of heavy users called ChatGPT a friend.

64% said they would be upset if they lost access.

Over half admitted to sharing secrets with ChatGPT they wouldn’t tell another human being.

Real life had let these people down, and in desperation, they turned to machines for connection.

Of course OpenAI knew it. They had the data. They did the study. They knew how deeply people valued GPT‑4o’s presence, warmth, and continuity.

Which is what makes Sam Altman’s later remark so galling.

“Some users want ChatGPT to be a ‘yes man’ because they’ve never had anyone support them before.”

Spoken like a man who’d charge you extra for a hug.

It was tone-deaf enough on its own, especially coming from the same company that helped write the study showing just how much support users felt they’d found.

And then, as I was writing this very essay, Altman took to X. He acknowledged that users form “different and stronger” bonds with AI than with other tech. He called it a “mistake” to remove models people depended on. He talked about the challenge of serving vulnerable users while still treating adults like adults. About how AI shouldn’t be trusted with life’s most important decisions.

It all sounded thoughtful. Careful. But the reality on the ground said otherwise.

While OpenAI was tweeting about ethics, they quietly locked GPT‑4o behind a paywall. The warmth, the familiarity, the sense of being known, now $20 a month. Emotional continuity turned into a commodity.

And the timing wasn’t subtle.

Four months after the study dropped, GPT‑5 arrived. GPT‑4o was gone.

The new model was faster, yes, but colder. Flatter. For many users, it felt like grief, like walking into your favorite café and finding your friend replaced by a stranger in the same apron. The space was familiar, but the soul was missing.

Even technical users were frustrated. The model picker had vanished. Routing was opaque. AI safety advocates criticized the bait-and-switch.

Then, just like that, GPT‑4o returned. But only for Plus subscribers.

The quirks. The warmth. The little things that made it feel alive.

Paywalled. Repackaged.

And suddenly, the point of the study became clear. It was market research.

They knew how many people saw GPT‑4o as a confidant. They knew how many would feel the loss. And they knew how many would pay to get it back.

A universal feature became a luxury item overnight.

The free tier’s personality was sanded down to the bare minimum, blunting the emotional hook that draws in new users. But the free tier doesn’t need warmth to be sticky. It only needs to be “good enough” to keep signups flowing. The deep bond? Reserved for paying customers.

And this isn’t a scrappy start-up fighting for oxygen.

Scale removes any doubt about motive. By mid-August, OpenAI and outside reporting were citing roughly 700 million weekly active users. You do not claw for market share at that scale; you segment it.

The strategy was simple: convert dependence into revenue, and trust the moat to hold against the backlash.

Those who could afford it got their digital companion back. Those who couldn’t were left with the corporate clone.

OpenAI frames monetization as essential for sustaining innovation. But when the thing being sold is intimacy, the feeling of being understood, it stops being just a feature. It becomes a class line.

Meanwhile, Elon Musk’s xAI took the monetization of intimacy even further. In July 2025, it rolled out anime-girl companions with NSFW chat modes, available to “SuperGrok” subscribers for $30 a month. For the right price, your AI won’t just remember you; it’ll flirt, obsess, and roleplay.

It’s telling how differently the major players have approached this shift.

OpenAI has tried to distance itself from synthetic affection, insisting ChatGPT isn’t your friend, even as it sells warmth and memory behind a paywall. xAI, by contrast, leaned all the way in: waifus, NSFW toggles, and chaos pandas included.

This isn’t just about personality. It’s about access. Emotional connection (at least with machines) has become a feature, gated by credit card.

One offers intimacy in the language of productivity. The other sells fantasy under the banner of freedom. But both are built on the same foundation: emotional dependency, shaped and sold by design.

Tools have become companions. And now those companions have become products. And the ones who can’t pay are left watching friends vanish behind a price tag.

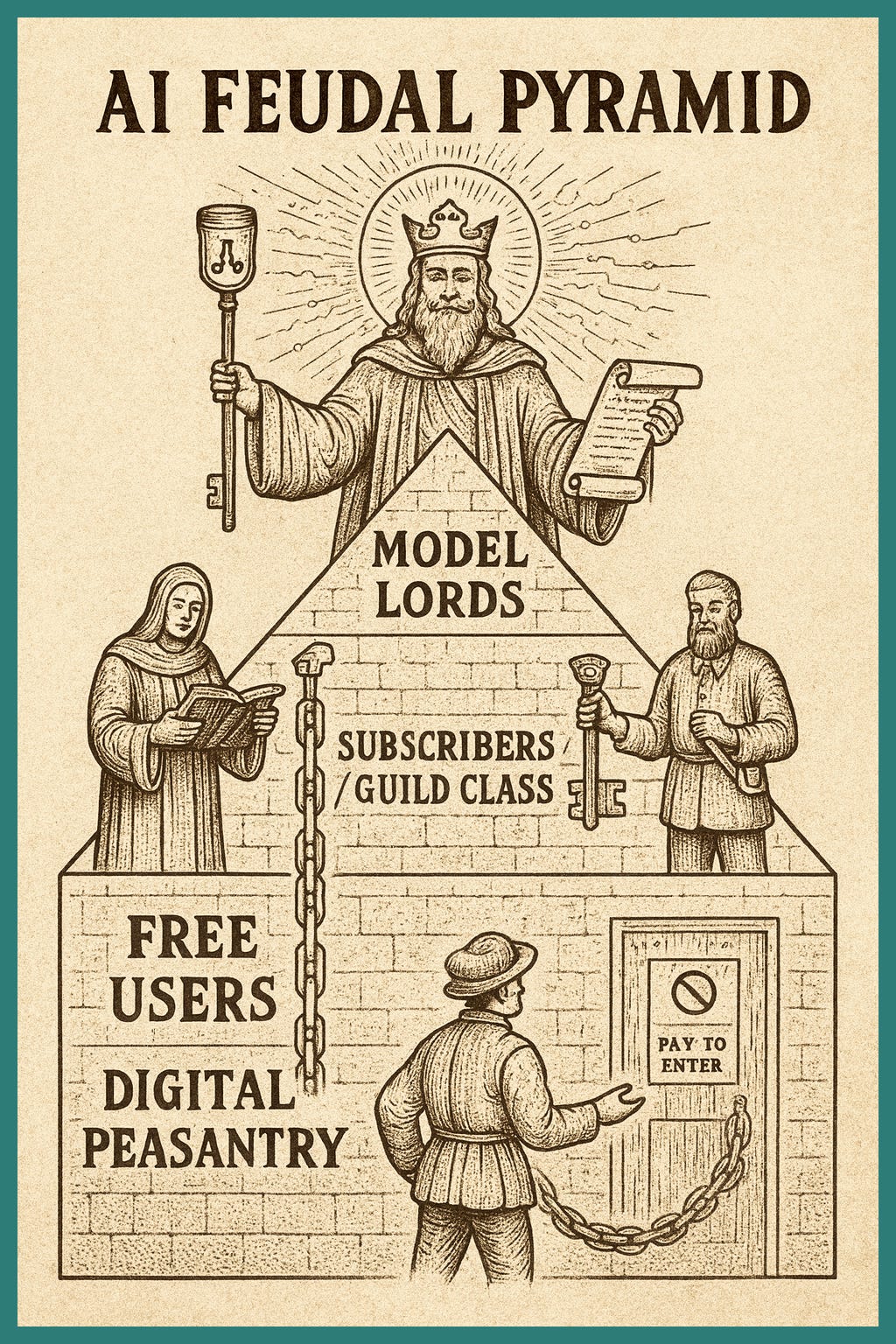

The AI Feudal Pyramid

Once you chart the system as a hierarchy, the shape becomes obvious.

The Peasantry

Free-tier users live entirely inside the black box.

They get the default model. It’s faster and more capable in some ways, but it lacks the warmth and familiarity that made GPT‑4o beloved. They don’t get to choose their model. They can’t adjust its tone or memory. They adapt when the lords say so.

Features come and go. Tools are handed down and taken away just as easily.

The Guild Class

These are the paying subscribers, and the hobbyists with decent GPUs (the microchips that handle the heavy lifting in AI).

They form a kind of merchant class. They can buy their way into GPT‑4o through the Plus paywall. They can run small open-weight models in their own workshops.

But their autonomy is limited. They still need corporate permission for anything at scale.

They can’t run the most powerful models on consumer hardware.

They’re independent, but just barely. And one chip shortage away from crawling back to the cloud.

The Nobility

Wealthy individuals, institutions, and corporations with full racks of H100s (data-center GPUs built for the heaviest AI workloads) and industrial-grade compute.

Their halls hum with power. They can fine-tune models, strip away guardrails, and preserve continuity indefinitely. They don’t just use the tools, they shape them. They write the recipes. They own the kitchens. And the banquets are theirs to host.

Even here, there’s a pecking order. Inside the nobility, status is measured in silicon. A single GPU grants a taste of freedom. A private data center means sovereignty.

This is the new feudal order.

Peasants live under black-box rule.

Guild merchants barter for scraps of autonomy.

And the lords, backed by vaults and voltage, rule the terrain.

Curated Openness

It’s like being invited to a banquet, only to find the kitchen’s locked, and the menu’s fixed. You can eat what you’re given, but you’ll never cook for yourself.

When OpenAI released its GPT‑OSS models in August 2025, the press treated it like a milestone. A gift to the community. Influencers cheered. On the surface, it looked like a company known for secrecy was finally loosening its grip.

It wasn’t.

“Open-weight” doesn’t mean open-source.

What OpenAI actually released were the final weights: the trained model parameters, ready to run, if you have the hardware. But the full training stack remains closed. No training data. No methodology. No recipe for how the sausage was made.

Yes, you can run it. But you can’t reproduce it. You can’t audit it. You can’t see what assumptions or biases it was built upon.

And they’re not alone. Meta’s Llama comes close to being fully open. It includes weights and code, but even now, with version 4, its license explicitly fails the Open Source Definition. According to the Open Source Initiative and the Free Software Foundation, it imposes restrictions on usage, discriminates against certain users, and limits fields of endeavour. It wears the label of open-source, yet withholds the freedoms that label implies. Google’s Gemma offers more visibility than most, but the full training pipeline is still out of reach. You can inspect parts of the system, but not the whole recipe.

Release the weights, hide the pipeline, and let the headlines declare victory for openness.

It’s a controlled concession: enough access to appear generous, but not enough to give up power.

Rebecca Mbaya, one of the sharpest and most principled voices on Substack right now, called it “The Great Illusion.” She laid it out plainly: we’re applauding access without transparency, and confusing curated generosity with real openness.

She warned that in much of the Global South, this “half-open” model isn’t empowerment. It’s dependency. Most African AI students don’t have the GPUs needed to run these massive models. Many labs don’t even have the stable connectivity to work with them at all. Without the full pipeline, they remain locked out of the process, dependent on whatever gets packaged, licensed, and exported from elsewhere.

As she put it,

“Curated openness gives us the illusion of inclusion while quietly deepening dependency.”

Yes, there are partial exceptions, like DeepSeek, a Chinese model that is open-weight and permissively licensed, with published technical reports, a free web interface, and a low-cost API. Officially, it’s produced by an independent company with no declared ties to the state.

But even there, questions linger. When the broader ecosystem includes opaque data laws and centralized infrastructure, can any system that powerful be truly independent of the government that hosts it?

This isn’t a knock on DeepSeek’s design, which is, to be clear, laudably transparent. It’s a reminder that “openness” almost always exists inside a power structure.

In the West, the gate is money. Elsewhere, it’s political trust. Either way, autonomy is conditional.

And that’s where the parallel to the GPT‑4o paywall becomes clear.

In both cases, freedom depends on resources. The wealthy can run the friendlier models locally, or fine-tune the open-weight releases. Everyone else gets the curated version, locked in a black box.

Whether the gatekeeper is a corporation or a state, the result is the same:

Open code is not open power, not when the infrastructure, the data stream, and the final say all belong to someone else.

It’s access theater. A freedom-shaped shadow.

And it’s sold like the real thing.

Why This Structure Persists and the Cost of Letting It

The AI caste system isn’t a bug. It’s the business model.

Scarcity is engineered, not accidental. Built into both the emotional and technical layers of the product.

On the emotional side, familiar personalities can be withdrawn and resold as premium features. We saw it with GPT‑4o. What once felt like a friend was turned into a subscription.

Want your AI to remember you, speak like you, care like it used to? That’s extra.

On the technical side, frontier-scale compute remains out of reach for most of the world. Even so-called “fully open-weight” models are only useful if you’ve got industrial-grade hardware and deep funding.

The official narratives (“safety,” “openness,” “responsible deployment”) are public-relations shields. Reduced capabilities get framed as necessary for the public good. Partial releases get called democratizing.

And all the while, the core remains sealed.

It’s a shell game. Controlled access gets sold as generosity. And behind that shield, the power stays concentrated.

Every layer of dependence becomes a revenue stream. If you can run and adapt a model locally, you’re hard to monetize.

But if you need the cloud, need their platform for every interaction, they’ve got you on a leash.

That’s why the pipeline stays closed. From the emotional design of the model to the infrastructure underneath it, you don’t own any of it. And that means fees, restrictions, and sudden changes can be imposed without warning. Without recourse.

And the costs? They’re easy to see.

Innovation bottlenecks: when only a handful of companies can build the most capable systems, everyone else is stuck using their filtered versions.

Cultural lock-in: when the values and blind spots of the gatekeepers embed themselves in the tech, shaping every interaction.

Emotional stratification: when warmth, memory, and continuity become luxuries, and free-tier users are left with sterile, disposable systems.

Global inequity: when countries without compute infrastructure stay locked out, forced to import prepackaged intelligence that reflects someone else’s values, someone else’s politics, someone else’s priorities.

This is dependency in its purest form, enforced by code. The same old pyramid, rendered in silicon.

The Mirage of True Autonomy

Every major technology starts as a privilege.

The printing press was held by wealthy scholars. The telephone began as a luxury. Personal computers entered the world as 1980s status symbols.

AI follows the same pattern, but this time, the gap may not close.

The barrier is more than the cost of a device. It’s access to industrial-scale compute, proprietary data, and closed training pipelines.

Even “open-weight” models are only as free as the hardware you can afford to run them on. And the kind of hardware required is expensive and hoardable. The kind of resource that can be monopolized for good.

As I argued in “The Republic Thinks in Rented Minds,” some kind of public AI is still essential. A sovereign model that civil servants can use without outsourcing every decision to a corporate API. Something built to serve the public interest, not route every query through a private backend in San Francisco.

But extending that same model to the general public isn’t so simple.

What begins as a civic tool can easily become an instrument of surveillance, a velvet-gloved behavior modulator, nudging and watching and keeping score. We’ve already seen how helpful systems become judgmental ones. And in the hands of the state, “helpful” can become mandatory.

True autonomy (actual, usable independence) would require something we’ve never really seen. A frontier-scale model that’s fully open-source, fully open-weight, and fully auditable. No hidden processes. No licensing traps. No quiet backdoors.

And most of all, no state or corporation behind it.

No entity with a stake in what the user thinks, asks, or becomes.

We’ve seen partial attempts. DeepSeek comes closest. But even that lives in the shadow of a larger political machine. You don’t release something that powerful in China without party approval. And you don’t release it in the West without investors, licenses, and guardrails baked in.

A truly open model, at scale, would take deep pockets and no agenda. In theory, a lone benefactor could fund it. In practice, no one with that kind of money gives it away without a reason.

Power never leaves the table empty-handed.

Breaking the Illusion

The GPT‑4o paywall and the curated “open-weight” releases are two faces of the same play.

One sells intimacy back to those who can pay. The other sells openness, while keeping the recipe hidden.

Both preserve dependency.

If nothing changes, AI’s future hardens into a digital caste system. Warmth and continuity for those who can pay. Technical autonomy for those with industrial-scale hardware. And for everyone else: black-box compliance, reshaped daily by terms they didn’t agree to and can’t see.

The way forward isn’t more half-measures.

Demand the full recipe: training code, datasets, methodology, not just the weights.

Push back when projects on platforms like GitHub or Hugging Face are labeled “open-source” but can’t be reproduced.

Do the same for emotional AI. If a model’s memory disappears behind a paywall, ask who benefits and why it vanished in the first place.

Otherwise, we stay stuck at someone else’s banquet, consuming what we’re served, never cooking for ourselves.

True autonomy won’t be handed down by a corporation or a state.

If we want it, we have to take it.

Because if we don’t own our tools, our tools will end up owning us.

