The Republic Thinks in Rented Minds

On Sovereignty, Surveillance, and the AI We No Longer Own

The Illusion of Control

On July 23, 2025, the White House released a trio of executive orders: one to fast-track datacenter construction, another to promote American AI exports, and a third to purge “woke” ideology from federal models.

America’s AI Action Plan was unveiled alongside the orders, in a neatly choreographed reveal.

The infrastructure push made sense. The export strategy was expected.

But the “woke AI” order stole the spotlight.

It targeted large language models (LLMs) trained on values like diversity, equity, and inclusion. It demanded historical accuracy, scientific objectivity, ideological neutrality. No critical race theory. No systemic bias. No preferred outcomes.

What it didn’t demand was ownership.

To a careful reader, this was a confession.

The real scandal isn’t AI’s political lean. It’s that the U.S. government doesn’t own the systems it relies on. It doesn’t build them, doesn’t host them, doesn’t even set the terms of service.

When federal workers use commercial LLMs (whether ChatGPT, Gemini, Claude, or Grok), their prompts are processed through infrastructure the Republic does not control.

Moderation layers, filter logic, data retention: all governed by private platforms with their own incentives, liabilities, and unseen priorities.

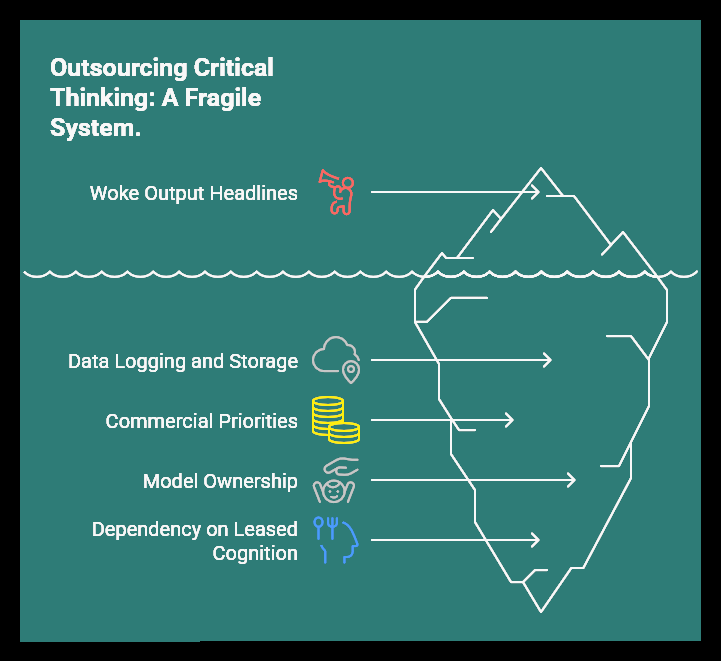

The Republic is outsourcing critical thinking to rented infrastructure.

And like any rental, it comes with rules. Prompts are logged. Interactions are stored. Deleted chats aren’t really deleted. What comes back is filtered through commercial priorities and legal caution. Insight takes a backseat to safety.

So-called “woke” output gets all the headlines, a lightning rod for critics and culture warriors alike.

But that bias, real or not, is only the surface. The deeper layer is who owns the model, sets the defaults, applies the filters, and controls the archive.

This essay is about the basic premise of government thinking. You cannot govern what you do not control. You cannot act independently while leaning on leased cognition moderated by external entities. No government should make policy inside a black box it doesn’t own.

I’m going to show how deep the dependency goes. How fragile the system is. How far behind the U.S. has already fallen.

And why it’s time, finally, for the Republic to think with a mind of its own.

Dependency on Closed Systems

The federal government uses large language models. It’s written into policy.

The July 2025 executive order, “Preventing Woke AI in the Federal Government,” didn’t name vendors, but it left no doubt: agencies are procuring LLMs, using them to generate content, and reacting to the ideological lean of their outputs. The order makes demands, defines terms, and outlines how agencies should use AI. It formalizes what’s already happening.

Every LLM query generates data: prompts, context, operator input. And for closed models like ChatGPT, Gemini, or Claude, that data doesn’t stay local. It travels through proprietary infrastructure and is stored, by default, in corporate cloud systems the government doesn’t control.

In May 2025, a federal judge ordered OpenAI to preserve all user interactions, retroactively, indefinitely, even deleted chats. I documented the case in The Paper and the Panopticon. That ruling didn’t just change how one company handles data. It exposed a systemic design failure.

Because if every prompt is preserved, then every prompt can be breached.

If a chat window becomes a deposition, then every public servant, every government employee who ever used an LLM may already be under virtual subpoena.

And one such archive, by court order, already exists.

Draft legislation. Internal memos. Raw policy language. Unpublished decisions. Everything a government employee has ever typed into ChatGPT is now stored on OpenAI’s servers, ready to be subpoenaed, sifted, or silently extracted.

On June 6, OpenAI filed a motion to vacate the preservation order, calling it an “overreach” that conflicted with its privacy commitments. But the New York Times lawsuit had already forced the company into a corner, where the full implications of its data retention practices became publicly indefensible.

In July, U.S. District Judge Sidney Stein denied OpenAI’s appeal.

The ruling stood: retain everything. Deleted chats included.

While the New York Times lawsuit placed OpenAI’s data practices under a unique legal microscope, this vulnerability is not unique to a single company. The fundamental risk lies with any closed, commercial model. Every query submitted to Google's Gemini, every draft composed in a cloud-based AI suite, is subject to the provider's terms of service, data retention policies, and internal infrastructure. The specific legal threat may differ from vendor to vendor, but the core issue remains.

The Republic’s thoughts, whether typed into a chat window owned by a startup or an established tech giant, are still being processed and stored on servers it does not control.

Privacy attorney Jay Edelson has warned that while OpenAI may have stronger security than most firms, the real vulnerability now lies in who handles the data next.

“Lawyers have notoriously been pretty bad about securing data,” he said. “So the idea that you’ve got a bunch of lawyers who are going to be doing whatever they are with some of the most sensitive data on the planet and they’re the ones protecting it against hackers should make everyone uneasy.”

With billions of preserved chats in play, privacy experts warn that users’ most personal conversations, once presumed ephemeral, are now subject to discovery, mishandling, or breach. And while enterprise customers appear to have been exempted from the order, ordinary users have had no say in the matter.

“The people affected,” Edelson said, “have had no voice in it.”

After the court order, Sam Altman, OpenAI’s CEO, discovered a conscience.

In a subsequent interview, he warned that users, especially young people, are treating ChatGPT like a therapist, sharing deeply personal thoughts, relationship troubles, even confessions. But unlike therapy, there’s no legal privilege. No confidentiality. No protections.

“So, if you go talk to ChatGPT about your most sensitive stuff and then there's like a lawsuit or whatever, like we could be required to produce that.”

He called it “screwed up.”

And he’s right to be worried. State-backed hackers have breached supposedly secure infrastructure for far less. There’s no way of knowing whether those logs have already been exfiltrated. But we can be certain of this: chat histories from government officials, believed to be private, are an irresistible target for nation-state intelligence.

None of this appears in America’s AI Action Plan.

It praises public-private partnerships. It calls for innovation, competitiveness, and access.

But it says nothing about data retention or legal exposure.

Nothing about the national security risks of offloading cognition to servers governed by corporate legal obligations.

No discussion of foreign interception. No reckoning with the broken trust between AI vendors and institutional users.

The plan talks about safety.

But it means output safety: whether a model says something toxic, problematic, or politically inconvenient.

It says nothing about input safety: the protection of queries, drafts, and decision-making processes.

No answer to what happens when the government’s thinking becomes readable, subpoenaable, or exfiltrated.

And when the cracks begin to show, even memory is managed.

The Action Plan instructs the Federal Trade Commission to review and potentially revoke past rulings that “unduly burden AI innovation.”

The Panopticon is no longer metaphor.

It’s a backend feature: always watching.

And it’s collecting everything. Building a paper trail. Capturing what you meant to say, what you almost said, what you thought you deleted.

That trail is now permanent. And searchable.

The Republic is training on borrowed brains.

But someone else is saving the dreams.

The Global AI Arms Race

As I outlined in BRICS, Lies, and LLMs, the global AI race has moved beyond theory. It’s now strategic, state-backed, and accelerating.

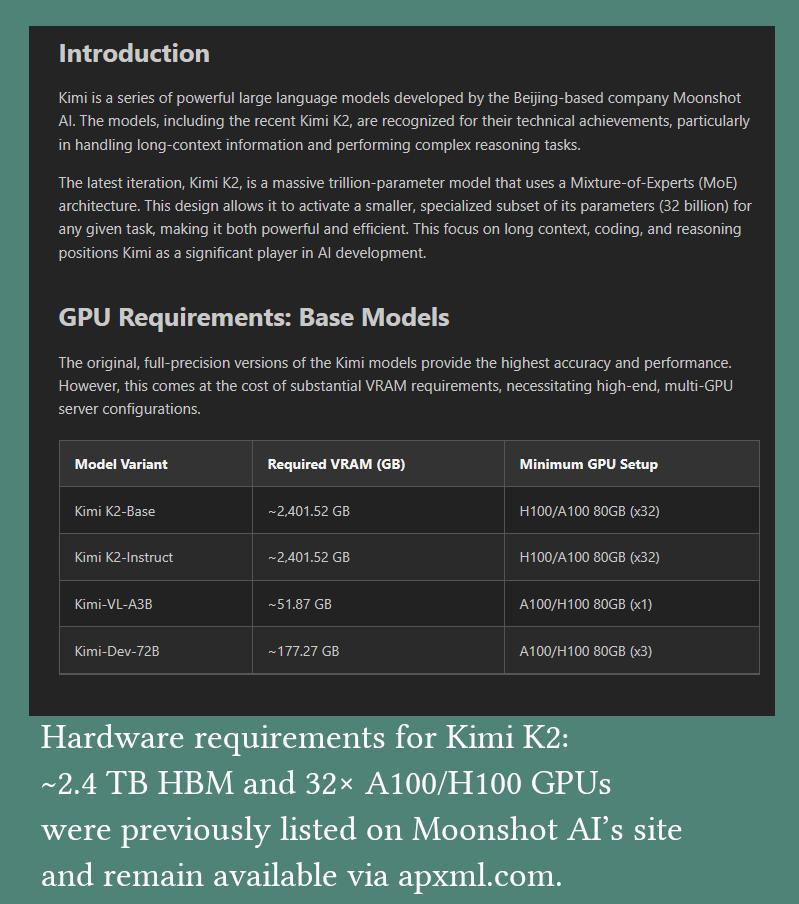

China is leading the charge. It has released frontier-level open-weight models like Kimi K2 and DeepSeek-R1, fully free, fully capable, and competitive with anything American firms keep behind a subscription plan. These aren’t research toys. These models can run independently, on domestic hardware. Once released, they answer to no one: not Beijing, not Washington. Just the person behind the keyboard.

Other BRICS nations (Russia, India, Brazil, South Africa) aren’t far behind. And the quiet nations (Iran, North Korea) are almost certainly building, too. For control. For independence from Western influence. Openness and commerce are secondary or irrelevant.

Meanwhile, the United States still believes it can dominate cognition without owning it.

Western labs have long framed open-weight models as a danger, citing safety, alignment, extremism, and biosecurity.

But those warnings wither when the market shifts.

With China flooding the field with open-weight, deployment-ready models, even OpenAI is now preparing to release one of its own.

Google, for its part, has already released a line of open-weight models. The Gemma family. But its openness is bounded. The weights are public, but the license is restrictive, the training data opaque, and the preferred deployment path leads straight back to Google Cloud. It’s openness as posture, not principle.

These releases are gestures, meant to deflect criticism rather than decentralize cognition. Gemma functions as a reputational hedge, part of a broader campaign to appear open without surrendering control. Even in open-weight attire, the frontier remains behind glass.

So America’s AI strategy amounts to outsourcing innovation to a handful of private labs, each guided by its own business model, compliance stack, and internal politics.

OpenAI, Anthropic, and xAI form a loosely aligned bloc: backed by overlapping investors, united by alignment rhetoric, and protected by lobbying arms.

Google stands adjacent. More performance-driven, less evangelistic, but still a corporate custodian of public thought.

Together, they’ve built platforms that define trust privately and distribute access selectively, while branding control as responsibility.

America’s AI Action Plan calls artificial intelligence a “geostrategic asset.” It acknowledges the stakes.

It even praises open-weight models, citing their value for research, security, and business adoption.

But when it comes time to act, it steps back.

No direct investment.

No sovereign infrastructure.

Just a promise to “create a supportive environment” and hope the private sector builds something worthy of the Republic.

“We need to ensure America has leading open models founded on American values.”

“The Federal government should create a supportive environment for open models.”

—America’s AI Action Plan, July 2025

That’s abdication wrapped in patriotic language, not strategy.

The plan gestures at openness, but its motives are industrial, not ideological.

“Open” has become a talking point. A sales funnel. A shield for industrial policy disguised as freedom.

If China deploys a sovereign model for governance by 2026, running on Chinese silicon, trained on Chinese history, shaped by Chinese ideology, will the West still be arguing about GPU quotas?

The race is already underway.

And the Republic still thinks it’s leasing productivity software.

Capitalism Is the Bottleneck

The U.S. didn’t build minds. But it built the groundwork (satellites, weather models, census engines) and then handed the job over. It let private firms decide what those systems mean and what they’re for. Now, even parts of the foundation are slipping away.

Policy builds nothing. It points to private platforms and calls it progress.

This comes with a quiet trade-off:

• trust in markets over mission;

• regulations shaped by the companies being regulated;

• accountability outsourced through procurement contracts.

We didn’t just skip the work of building our own systems. We lost control over them.

Now, when the government needs to think, it doesn’t reach for its own tools. It opens a private chat window and hopes for the best.

America’s AI Action Plan has now enshrined this approach in a document with no force, no teeth, and no shame.

Its centerpiece? A proposal to create a “financial market for compute.”

Think of it less as public infrastructure and more as a casino for rich people to gamble on AI’s future.

Instead of building sovereign models on sovereign hardware, the plan envisions a GPU futures exchange, where startups “bid for thought.”

This has nothing to do with strategy, and everything to do with monetizing access. And it leaves the Republic with no wisdom gained. Just a smoother path to dependence.

The bottleneck is economic.

We didn’t lose the frontier because we lacked imagination.

We lost it because we tried to rent the future instead of building it.

We’ve been here before

In the 19th century, railroads became critical infrastructure.

But instead of building them as public goods, the U.S. outsourced the job to barons (Vanderbilt, Gould, Huntington) who extracted profit, dictated routes, and reshaped the country’s geography to fit their balance sheets.

We’re doing it again. Only now, the tracks are made of tensors, and the trains carry our questions, our data, our thoughts.

OpenAI. Anthropic. xAI.

Different logos, same investors, same race: to dominate inference and set the terms of tomorrow’s thought.

Google funds itself, but the business model doesn’t change: Proprietary logic. Monetized cognition.

And the public rides in the dark.

And some still think the answer is switching seats.

Why Grok Isn’t the Answer

The tools are scattered.

One judge might use Gemini.

A staffer in the Department of Energy might be testing Grok.

A congressional aide types legislative queries into ChatGPT’s sidebar.

There’s no policy. No perimeter.

Just sovereign decision-making scattered across commercial chatboxes, each with its own filter, retention logic, and hidden observers.

This is what incoherence looks like.

Left to markets, cognition fragments.

Each platform shapes results in ways the Republic can’t audit or override.

It’s not just dependence. It’s disintegration.

When Elon Musk launched Grok as a “free speech” alternative to OpenAI, many hoped it would shift the landscape.

It didn’t.

It simply rerouted traffic to another walled garden: different moderation, same closed model.

Musk isn’t offering an independent system. He’s offering only the illusion of defiance, running on infrastructure he still controls.

The choice isn’t between OpenAI’s perceived “wokeness,” Google’s corporate caution, or Musk’s performative rebellion.

It’s between flavors of corporate oversight, each with its own telemetry, terms, and policies.

This is market segmentation, not freedom.

Swapping billionaires doesn’t change who’s in charge.

It just changes the logo on the interface.

The GPU Wall

And all of this assumes supply can meet demand.

Frontier-scale models are pushing the limits of hardware. In one recent case, China’s Kimi K2 reportedly requires ~2.4 terabytes of high-bandwidth memory to run uncompressed at full scale. That’s a supercomputing dependency, not a research toy.
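A back-of-envelope sketch shows why a figure of that size is plausible. It assumes roughly one trillion parameters stored as 16-bit weights and 80 GB of high-bandwidth memory per H100-class accelerator. Neither assumption comes from the reporting cited here, and the function names are illustrative only.

```python
# Assumptions (not from the essay): ~1 trillion parameters, 2 bytes per
# parameter (16-bit weights), and 80 GB of HBM per H100-class accelerator.
PARAMS = 1_000_000_000_000
BYTES_PER_PARAM = 2
HBM_PER_GPU_GB = 80
REPORTED_TOTAL_GB = 2_400  # the ~2.4 TB figure cited in the essay


def weights_gb(params: int, bytes_per_param: int) -> int:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return params * bytes_per_param // 10**9


def gpus_needed(total_gb: int, hbm_per_gpu_gb: int) -> int:
    """Smallest number of accelerators whose combined HBM covers total_gb."""
    return (total_gb + hbm_per_gpu_gb - 1) // hbm_per_gpu_gb  # integer ceiling


weights = weights_gb(PARAMS, BYTES_PER_PARAM)
print("weights alone (GB):", weights)
print("accelerators for weights alone:", gpus_needed(weights, HBM_PER_GPU_GB))
print("accelerators for the reported total:", gpus_needed(REPORTED_TOTAL_GB, HBM_PER_GPU_GB))
```

Under these assumptions the weights alone account for most of the reported footprint. The remainder would be working memory for inference, which this sketch does not model.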

Multiply that across hundreds or thousands of instances, and the strain moves from balance sheets to the entire hardware pipeline.

U.S. labs don’t disclose the hardware requirements of their largest proprietary models, but it’s safe to infer that their needs are comparable or greater. GPT‑5, built on o3, surpasses GPT‑4o and is built for cloud-scale inference.

Meanwhile, Elon Musk has publicly stated that xAI’s goal is 50 million H100-equivalent compute units online within five years: a figure so surreal it feels less like planning and more like prophecy.

And that hardware? It still comes from offshore, for now.

TSMC, the Taiwan Semiconductor Manufacturing Company, produces the majority of the world’s most advanced logic chips.

Even with over $165 billion committed to building fabs in Arizona, the core investment and control remain with TSMC.

Subsidies may help fund the facilities, but the engineering, ownership, and strategic command still live offshore.

The same “American” GPUs celebrated in the Action Plan are manufactured by a foreign-owned firm headquartered 100 miles off the coast of China.

The chips are American in name only. Engineering and strategic control remain offshore, even as production begins to migrate.

To be clear, bringing TSMC to U.S. soil was a shrewd move.

It ties the foundry kingpin to the American economy. It buys leverage. It makes the U.S. indispensable to global cognition.

But we bought proximity, not autonomy.

The chips are going to be made here.

The command still lives abroad.

Even TSMC can only make so many chips. Its fabs are running near capacity, and each new node pushes the edge of what's physically and economically viable. GPU manufacturing is already hitting the wall.

We spent decades offshoring production for profit.

Now we simulate control through domestic facilities we don’t fully command and call it strategy.

And the Action Plan’s response?

Not smarter design. Not leaner models. Not public architecture.

Just “financial markets for compute.”

As if cognition were a commodity.

As if scale were infinite.

As if power grids, foundries, and physics weren’t already stressed to capacity.

Open-Weight Models: The Tor of Cognition

Open-weight models, LLMs whose trained parameters are published for anyone to download and run, can be very dangerous.

They can be misused: prompted for biohazards, weapon designs, deepfakes, radicalization. They can be fine-tuned into ideological engines. They can be run without oversight, modified without permission, and distributed without control.

But banning them doesn’t eliminate the danger. It just narrows who gets to use it.

The same governments and corporations calling for “responsible AI” are helping private firms build black boxes: systems no citizen can examine, no researcher can replicate, and no rival can run.

Because when weights are open, so is the possibility of a rival intelligence, one they can’t gate, throttle, or revoke.

This isn’t the first time a tool of emancipation has been treated like a threat.

Strong encryption was once classified as a munition.

The printing press destabilized monarchies.

Tor, the backbone of the so-called dark web, grew out of onion-routing research at the U.S. Naval Research Laboratory to enable anonymous online communication, and still receives government funding. It opened the internet’s back alleys to both whistleblowers and predators.

In each case, the response was the same:

Control the tool. Shape the narrative. Call it safety.

Today, anyone can download Tor. Anyone can use strong encryption.

The tools built for clandestine communication now power global dissent.

Open-weight models aren’t a utopia.

They’re a distributed arms race of cognition. They’re infrastructure: shared, forked, studied, and reclaimed.

They’re the first models that independent actors can audit, replicate, and run on hardware they control. They restore a measure of symmetry, between user and model, between those who govern and those who are governed.

In a world where centralized LLMs are shaping news, law, culture, therapy, education, and policy, open models are the only countermeasure left.

Yes, they can be abused.

So can closed models. So can secrecy. So can power.

Pluralism isn’t safe.

But it’s better than submission.

What Sovereignty Looks Like

If the Republic truly wanted a mind of its own, it would build one.

Not another "public-private partnership." Not a compute voucher. Not an API login wrapped in patriotic branding.

An American model. On American-owned hardware. Governed by public law.

Call it LLM.gov.

It doesn't need to be open-source.

It doesn't need to be bleeding-edge.

But it must be ours.

Government operations need reliability, not novelty. Proven architectures, not experimental code that might hallucinate differently tomorrow. A generation-behind model offers known performance, understood vulnerabilities, and predictable costs. Better to fully control a GPT-3.5-level system than rent access to whatever OpenAI calls cutting-edge this quarter.

Hosted on government iron, with classified tiers fully air-gapped, and operational layers restricted to verified civil servants inside a secure national enclave, not on private clouds or third-party platforms.

Trained on American civic values, not political talking points, with clear, testable safeguards against bias and misuse.

Operated on a tiered access model: standard layers for day-to-day government use, and protected strata for national security, policy formulation, and judicial research.

Accessed through a secure portal on every civil servant's smartphone and computer.

A public brain, one that serves people, not shareholders.

With an audit trail.

With rights embedded in its design.

With policy outputs, not product roadmaps.
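The tiered access and audit trail described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a design for any real system: the `Tier`, `User`, and `Gateway` names are hypothetical, and a real deployment would sit behind identity verification this sketch does not attempt.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical access tiers, ordered from least to most restricted."""
    STANDARD = 1   # day-to-day government use
    PROTECTED = 2  # national security, policy formulation, judicial research


@dataclass(frozen=True)
class User:
    name: str
    clearance: Tier


@dataclass
class Gateway:
    """Checks a request against the user's tier and records every attempt."""
    audit_log: list = field(default_factory=list)

    def authorize(self, user: User, requested: Tier) -> bool:
        allowed = user.clearance >= requested
        # Denied attempts are logged too: the trail covers every request.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user.name,
            "requested": requested.name,
            "allowed": allowed,
        })
        return allowed


gateway = Gateway()
planner = User("city_planner", Tier.STANDARD)
print(gateway.authorize(planner, Tier.STANDARD))
print(gateway.authorize(planner, Tier.PROTECTED))
print(len(gateway.audit_log), "entries in the audit trail")
```

The point of the sketch is the ordering: the check and the log entry happen in the same place, so no request reaches a model without leaving a record the public institution owns.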

And before someone calls this authoritarian:

We already build systems the public can't log into.

We already do it for classified communications.

For weapons platforms.

For treasury forecasts and secure ballots.

For anything that demands control without surveillance capitalism stitched into the core.

America's AI Action Plan explicitly recommends secure compute clusters for the Department of Defense and the intelligence community. Because when it comes to DoD cognition, sovereignty matters.

So the question isn't whether we can do this. It's: why not the rest of the Republic?

Why should a city planner in Des Moines rely on a chatbot whose ethical layer was tuned by OpenAI? Why should a federal judge's research pass through a moderation filter maintained by Microsoft or Google? Why should the mind of the state be leased, logged, and litigated?

Yes, building LLM.gov would cost billions in hardware, talent, energy, and time. Critics will call it waste, claiming private firms move faster and scale bigger.

But transformative infrastructure isn't born in boardrooms. DARPA seeded the internet, not Silicon Valley. NASA rewired national ambition, not defense contractors, and the race to the moon created early demand for the integrated circuits that evolved into modern computing, smartphones, and today's AI datacenters. Apollo didn't just build rockets. It inspired a generation of engineers. Bold missions attract talent.

The interstate highway system wasn't left to tollbooth tycoons. Imagine corporate checkpoints at every interchange. Privatized rail left America with crumbling trains and car-dependent sprawl. Privatized AI risks a Republic thinking through corporate rules, beholden to shareholders, not the people.

A sovereign model isn't a luxury. It's the foundation for a nation that owns its mind.

James Madison warned of "factions" corrupting public reason.

He didn't foresee that those factions would be LLMs, trained on venture capital KPIs at a San Francisco startup, or optimized for ad revenue with global user data at a Silicon Valley giant.

The state's mind must be accountable to the people.

Not to shareholders.

Not to founders.

Not to foreign courts or preservation orders.

Of course, this vision comes at a cost.

The best minds in AI don't work in government. They work at OpenAI, xAI, Anthropic, drawn by obscene salaries, equity windfalls, and a seat at the table of emerging gods.

The frontier is staffed by talent we no longer train, funded by capital we no longer direct, and built on infrastructure we no longer own.

To keep pace would require more than policy. It would require resolve.

Hardware. Power. Staff. Billions in sustained investment.

A national lab not just in name, but in depth.

A mind worth calling the Republic's.

Innovation With Chinese Characteristics

Meanwhile, China is executing a simpler playbook.

It allows its “private” labs to release open-weight models (Kimi, DeepSeek, WuDao, GLM-4.5) within a domestic sandbox. Not censorship, exactly. Co-option. The Party doesn’t fear sovereignty because it already assumes it.

And inside that sandbox, a price war is quietly accelerating:

GLM-4.5 undercuts DeepSeek on input cost and slashes the price of output from $2.19 to 28 cents per million tokens.

Kimi K2, from Alibaba-backed Moonshot AI, claims to beat ChatGPT at coding and charges less than Claude.
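To make the gap concrete, here is a rough cost comparison using the two output prices quoted above for DeepSeek and GLM-4.5. It assumes both are in U.S. dollars per million output tokens, and the one-billion-token workload is a hypothetical chosen only for illustration.

```python
from decimal import Decimal

# Output prices quoted in the essay, assumed to be USD per million tokens.
DEEPSEEK_OUTPUT = Decimal("2.19")
GLM_OUTPUT = Decimal("0.28")


def output_cost(price_per_million: Decimal, tokens: int) -> Decimal:
    """Cost in USD of generating `tokens` output tokens at the given price."""
    return price_per_million * tokens / Decimal(1_000_000)


# Hypothetical workload: one billion output tokens.
TOKENS = 1_000_000_000

deepseek_cost = output_cost(DEEPSEEK_OUTPUT, TOKENS)
glm_cost = output_cost(GLM_OUTPUT, TOKENS)

print("DeepSeek output cost (USD):", deepseek_cost)
print("GLM-4.5 output cost (USD):", glm_cost)
print("price ratio:", (DEEPSEEK_OUTPUT / GLM_OUTPUT).quantize(Decimal("0.1")))
```

At list price, the cheaper model generates the same volume of text for a fraction of the cost, which is the whole logic of a price war.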

These are not hobbyist releases. They’re optimized for deployment: agentic, efficient, and priced to scale. And already, quantized versions are running on consumer-grade hardware in the hands of hobbyists.

While Kimi K2 shows off China’s capacity to scale upward, GLM-4.5 marks a pivot: downward, decentralized, and sovereign. One model proves power. The other ensures control.

GLM-4.5 was released just hours before this writing. Independent evaluations are still forthcoming. But its specs (agentic design, tiny footprint, and sub-DeepSeek pricing) signal where the race is headed.

We don’t need to mimic China.

But we do need to understand what they’ve remembered:

Cognition is a sovereign function.

And outsourcing it, however efficient, becomes, eventually, a form of surrender.

Lost Our Minds

This is the revelation of a quiet crisis.

A government that does not build its own intelligence.

A republic whose cognition is hosted, moderated, and monetized by private firms.

A nation that fears sovereign minds more than rented ones.

It would be easy to end this with dread.

To say “Oh god, we’re so screwed” and leave it at that.

But this is a diagnosis. I won’t be so fatalistic.

The Republic’s mind is on life support.

We’ve survived secession.

We’ve survived sabotage.

We’ve even survived surveillance.

We may not survive a rented mind.

Because when governance itself is piped through proprietary black boxes,

When laws are drafted with tooltips,

When policy is written by autocomplete,

When judicial reasoning is downstream from embeddings trained in Palo Alto...

What remains of the Republic?

This isn’t a theoretical risk.

It’s current infrastructure.

It’s happening now.

And what does the government offer in response?

Not a new foundation. Not a public brain.

Not even a mandate.

Just a document.

America’s AI Action Plan is not legislation.

It’s not a charter.

It’s not even an executive order.

It’s a pamphlet of intentions without obligation.

An elegant surrender disguised as strategy.

America’s AI Action Plan doesn’t refute this thesis. It completes it.

It reveals a government that sees the risk, names the need,

and chooses to rent cognition anyway.
