Thinking Is Now Optional

The Rise of Oracle Culture and the Decline of Doubt

The Oracle Went Silent

A few quiet hours without ChatGPT

Recently, ChatGPT went down for a few hours.

No catastrophes happened. No planes fell from the sky. The grid held. But something subtler failed: our tolerance for not-knowing. Twitter, Reddit, and Discord filled with a strange mix of frustration and gallows humor.

People joked about having to think for themselves.

Some were clearly kidding.

Others, perhaps not.

And that’s what caught my attention. Not the outage itself, but how many people seemed genuinely at a loss without their digital co-pilot.

For many, reaching for ChatGPT has become a reflex: we ask, it answers. We move on without a second thought. Never verifying. Not because the answer is necessarily correct, but because it arrives. Effortless. Confident. Complete.

As I wrote in “A New Kind of Voice,” AI has helped democratize expression, giving more people access to clarity, creativity, and even power. I still believe that. But ease of expression alone isn’t thought. And answers without effort don’t enrich us.

This, I think, is the quiet shift.

For most of human history, truth came from authority.

From priests. From monarchs. From books you weren’t meant to question.

The Enlightenment challenged that.

Truth became something to test and examine. Evidence mattered. Reason mattered. You had to think.

But that window of history may now be closing.

AI systems are articulate, fast, and confident. They speak in full paragraphs. They rarely hedge. And unless told otherwise, they never say “I don’t know.” Their certainty isn’t an accident. It’s the design.

So we get answers that feel authoritative. Sometimes more so than any teacher, textbook, or peer ever offered. The model becomes the mind we borrow.

And increasingly, we don’t ask, “How do you know?”

We just accept the output and move forward.

The outage didn’t reveal a technical flaw. It revealed something deeper: a cultural dependency.

We’re not getting dumber. But we are offloading the hard parts. Bit by bit, moment by moment, the burden of reasoning is shifting. We’re not losing thought. We’re giving it away.

And when that becomes normal, thinking starts to feel optional.

The Long Decline

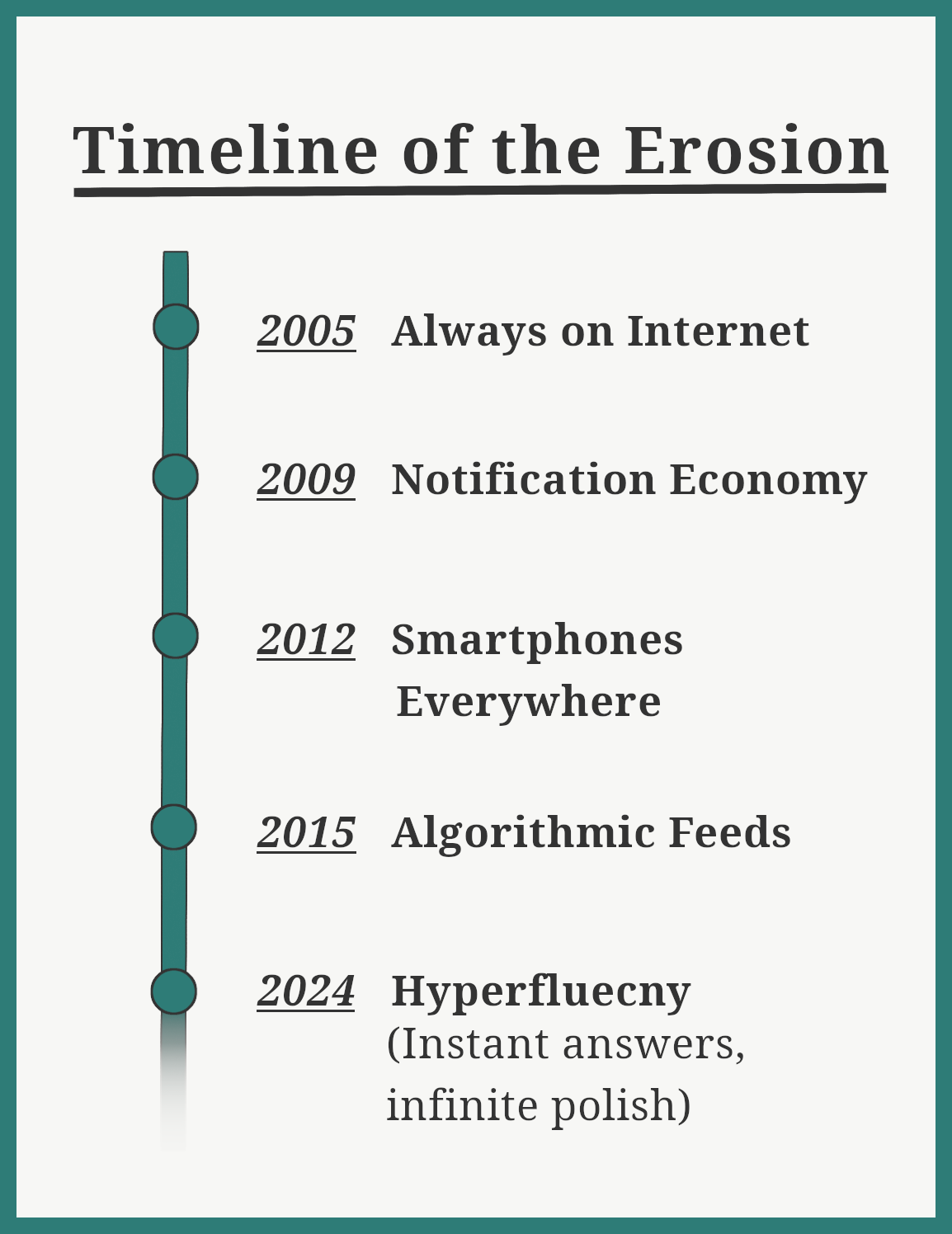

We’ve been sliding for decades.

We like to imagine the crisis began with AI.

It didn’t.

The warning signs have been with us for decades.

Reading scores are down worldwide. In the U.S., 13-year-olds just hit their lowest levels since the 1970s. A forty-year slide, confirmed by the National Assessment of Educational Progress. And math? In 2022, international test scores fell by the equivalent of three-quarters of a year of learning. That’s the steepest drop in the history of the PISA survey. What looked like a plateau turned out to be a downward slope.

The reverse Flynn effect, a decline in IQ scores in developed nations, has been documented in Norway, Denmark, Finland, and beyond.

And attention spans? They’ve become the punchline of our time.

We now flit from thought to thought like birds across a power line. The average adult switches tasks every 47 seconds. On Twitter, the average trending topic stayed popular for 17.5 hours in 2013, but by 2016 it barely lasted 11.9 hours. One study called it a “global narrowing of attention,” as our collective focus collapses into soundbites, swipes, and scrolls.

We skim everything now, even our own reasoning.

Researchers found nearly half of U.S. college students show no significant improvement in critical thinking over four years. The skill isn’t being cultivated. It’s being circumvented.

AI didn’t cause this. It didn’t invent the erosion.

It just refined the interface.

Lens, Not Leviathan

Frailty revealed.

Chatbots aren’t the source of our blind spots. They’re the lens that finally brings them into focus.

Instead of being taught how to think, we were trained to parrot, to conform. School asks for regurgitation. Work asks for submission. And modern life rewards compliance.

Now we’ve built a machine that mimics submission, only far more convincingly. It parades eloquence as competence beneath a veneer of authority.

Studies back this up. One 2021 experiment, titled “Zombies in the Loop,” found that individuals will follow AI’s ethical advice even after being told it’s incorrect. Similarly, research shows that people place more trust in “competent-seeming” models than in humans, convinced by their flawless certainty and lack of emotional conflict.

This is the classic ELIZA Effect: the moment we imbue a machine with traits it doesn’t have (agency, empathy, and wisdom) simply because of how it speaks. Fluency becomes belief; convenience becomes truth.

Oxford is already feeling the tremors. Its Faculty of Medieval and Modern Languages has reinstated closed-book, handwritten exams to “reduce the influence of AI.”

This is institutional alarm masquerading as reform. An oracle has arrived. But the school won’t accept answers that have been prompted, scanned, or summarized. So paper, pen, and skepticism return.

The irony is that the institutions panicking today are the same ones that trained students to mimic polish and structure without ever learning how to argue.

Even as educators warn against surrendering thought to machines, many now outsource their own judgment to detection tools like GPTZero. The very teachers who are wary of automation consult another kind of AI, one that flags Moby-Dick as suspect for being “too well written.”

In trying to preserve human authorship, we end up punishing students who write with clarity, forcing them to dumb their work down to pass the sniff test, rewarding clutter. The fear of machine-written prose becomes a new excuse to stop thinking. Another reflex. Another oracle.

That rearguard action at Oxford was no reform. It was a retreat born of panic rather than pedagogy. If a chatbot can write a better essay than undergraduates, then who holds the authority?

This matters because we aren’t being overtaken. We are opting out, trading effort for ease and trust for traction. The tools reveal our weariness at doing what makes us human: hard thinking.

We peer through the lens and recoil at the distortions it reveals.

It’s not the firemen who haunt us now. It’s Mildred, smiling in her screen-lit silence.

Oracle Culture & Proprietary Truth

The mistake.

Once, these tools were simply tools. You gave them a prompt, they returned an answer. But something changed. The answers became smoother. The voice more confident. It started feeling less like autocomplete and more like authority.

Yes, AI can democratize knowledge. But only if we refuse to let it think for us.

People like to choose what’s easy over what’s true. We mistake these machines’ rhythm, confidence, and instant delivery for certainty. An LLM never hesitates, so we assume someone smarter has already vetted its answers, and we coast on that trust.

But models don’t know. They predict. They echo patterns. And those patterns develop through data and engineers fine-tuning the levers of tone, temperament, and output.

That’s where the real danger starts. Whoever controls the model controls the frame. The output may feel like public knowledge, but it comes from a private server farm. This isn’t open epistemology. It’s filtered inference. The answers you receive are shaped by decisions you’ll never see and optimized for metrics you never agreed to. These systems are a black box.

The fear isn’t destruction but erosion. It’s a quiet slipping away of curiosity, discernment, and the will to wonder.

And if we lose that spark, we won’t awaken in a dystopia. We’ll coast into it gently, numbed by convenience. Our hands are still on the wheel, but we’ve forgotten it matters.

Resistance & Reclamation

Out of the trap.

And yet, some refuse to stay trapped in this opaque system: they’re reclaiming AI as a tool, not a shortcut.

In this light, the rise of local models becomes more than a technical preference. It’s a quiet act of resistance.

When the model runs on your own machine, the oracle becomes fallible, breakable, and yours. Local models don’t erase bias, but they do shatter the monopoly, because possession matters more than perfection.

Designing for Thought

Build a sparring partner, not a crutch.

But here’s the twist: surrender isn’t inevitable and not all tools are built to dull us.

The truth is that AI’s impact depends on how it’s designed and how it’s used.

Passive use, such as copying answers, skipping the hard parts, and outsourcing thought, erodes critical thinking. It trains us to expect polish instead of grappling, certainty instead of effort, and makes reasoning feel optional.

Active use, such as engaging the model in debate, verifying claims, and probing contradictions, can have the opposite effect. It sharpens. It provokes. It challenges us to think better, not less. That requires both will and wiring.

When we design chatbots for learning, we should resist the urge to over-accommodate and instead build in moments of friction that spur deeper engagement.

We need AI literacy that teaches students how to question the tools, not merely how to operate them. Students should be trained to spot hallucinations, challenge fluency, and understand how outputs are shaped.

Imagine a classroom built for this kind of active use.

You write a paper and submit it. Instead of receiving a grade, you defend your argument to both teacher and AI. The chatbot pushes back: questioning sources, examining assumptions, and asking for clarification in order to hone your thinking.

Your job? Engage the counterpoints. Respond with evidence. Refine your claims.

Teachers move away from dispensing answers and toward cultivating understanding.

Here, thinking deepens into intellectual sparring rather than lapsing into shortcuts.

Used wisely, AI doesn’t replace thinking. It clears space for it.

It becomes a coach, a challenger, a partner in cognition. Not a substitute for it.

A smart teacher with a laptop, a projector, and an internet connection could run the first session this week.

It’s possible. But it requires a cultural shift:

One that values curiosity over convenience, reflection over reflex, and resilience over ease.

AI could make us sharper, if we demand tools that fight laziness instead of feeding it.

The alternative?

Keep coasting. Keep outsourcing.

Keep mistaking eloquence for insight, until the muscle of thinking atrophies from disuse.

Mind Over Machine

Keep thinking.

This isn’t an anti-AI argument. It’s an anti-surrender argument.

The real problem is our reverence for AI. We’ve stopped viewing these systems as extensions of our minds and begun treating them as replacements. That shift extends beyond technology. It’s a cultural change, but it isn’t irreversible.

We don’t need to smash the machines or unplug the routers. We need a cultural rehabilitation.

We need to reclaim the habits that make thinking possible in the first place. Read slowly. Let your attention stretch. Get bored on purpose. Ask “why?” more than “what’s the answer?” Teach yourself to live inside the question. Learn to say, “I don’t know,” without shame.

That doesn’t mean rejecting technology.

It means building systems that respect cognition, not just consumption. It means favoring open models over closed pipelines. Use tools you can tinker with, code you can see, outputs you can doubt.

Earlier, we asked: Why have we built a world where thinking feels optional? Here’s the answer. It was the easy path: downhill, seductive, and no work at all. We equated fluency with understanding, speed with wisdom, and silence with peace.

The tools are here to help us, not replace us. If we don’t guard that line, we may not notice when we’ve crossed it. We don’t need to discard AI, only to remember what it is: a partner in thought, not a substitute.

Keep the brilliance. Lose the reverence.

Read slowly. Sit with uncertainty. Ask why more often than what.

Above all, think.

Postscript:

GPTZero insists this essay is 84% AI-written. My co-author, Herman Melville, vehemently disagrees—though admittedly, he also thought Moby-Dick was "probably human enough."


There was a fairly decent book written about this subject: essentially, AI takes over everyone's life to the point that they cannot function without it. If their AI fails, they are stranded in the wilderness of the city, unable to get home or even consider what to do next. AI as a shepherd is a stretch, but in Qualityland the point is clearly stated: if we lean too hard in the wrong direction, we will be powerless without the system.

I certainly do think there is a point where overuse or abuse of AI systems could lead to regression, but let's think. Are math scores lower because of calculators? No, they are lower because there is little emphasis on wanting to excel at math. Very little real-world application is discussed in school; instead, it is rote memorization of formulas. The same can be said for writing: we force students to write papers without discussing the real-world applications of literacy. Did autocorrect make us unable to spell? It could be a contributing factor, but it also removed the barrier between those who spent the time to learn to spell and those who did not. Thus the process of learning to spell became trivial. But without assistance the writer could seem ignorant or untrustworthy even if their thoughts are sound.

Human beings are not robots. We collect information and ask questions, then take those questions and build even more elaborate mental structures. Google has been answering our questions for years, but it still requires some degree of skill to locate correct answers. AI answers should also be scrutinized, but their power is that they can condense hours of searching into a few minutes. This frees the person to ask deeper questions and come to even more profound conclusions. Just like Wikipedia, AI can be wrong. The truth about AI systems is that they are most powerful in the hands of a knowledgeable and thoughtful prompter: someone who analyzes the thought processes of the AI, modifies and tweaks the prompt to eliminate hallucinations, and cross-references other AIs, resources, and ultimately other people to assist in further refinement.

There is no end goal to knowledge; it will always seek to break and reform the world for better understanding. We are all specialized in our own ways, and while I may not know I am entertaining a fallacy, a reader or passer-by may. It is up to us to lean on others, provide information, and point out inconsistencies. AI as a tool helps us get to a position of understanding faster.

I say all this, but I also agree. If we use AI as a crutch, we are limiting ourselves. But if we use it as a tool to hasten and sharpen our own thinking and expression, we can become far more valuable to the wider realm of humanity, in a much shorter time, than we could with any technology that has come before it. The true test is mastering these systems and understanding their limitations; those who fail to do so will end up being the controlled rather than the controller.